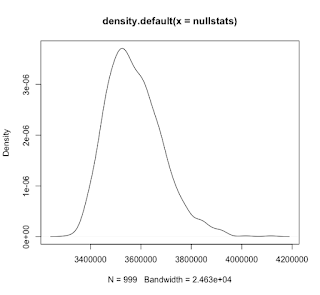

Theoretical numbers to superimpose on histogram

If you want superimpose the theoretical numbers at the top of an histogram based on a fitted distribution (for example, using fitdistrplus()), it is not so easy. Here is a generic function for such a purpose. It is integrated in my package HelpersMG : modeled.hist <- function(breaks, FUN, ..., sum=1) { # breaks is a vector with the breaks; it can be obtained directly from hist() # FUN is the function to integrate the density, ex. pnorm # ... are the parameters of pfun # sum is the total numbers in the histogram; 1 for emperical frequencies by <- breaks[2]-breaks[1] xp <- c(breaks, tail(breaks, 1)+by) par.fun <- list(...) par.fun <- modifyList(list(log.p=FALSE, q=xp, lower.tail=TRUE), par.fun) s <- do.call(FUN, par.fun) # s <- pfun(xp, par.fun[1], par.fun[2], log=FALSE) s <- head(c(s[-1...